Tutorial: llmPrompt Back End

All the tutorials and projects in this course require access to a back-end server as set up in this tutorial. Instead of setting up a separate server for each assignment, you may want to keep your server up and running for the duration of the term.

Server hosting

You need an Internet-accessible server: one that has a public IP address and is online at all times (i.e., it cannot be running on your laptop). Your server must also run the Ubuntu 24.04 operating system. You can use a real physical host or a virtual machine on Amazon Web Services (AWS), Google Cloud Platform (GCP), Microsoft Azure, AlibabaCloud, etc., as long as you can ssh to an Ubuntu shell with root privileges. The setup presented here has been verified to work on Ubuntu 24.04 hosted on AWS, GCP, a local Linux KVM, and a physical host.

Hosting cost

If you decide to go with the free-tier server from AWS or GCP that has only 1 GB of memory, the LLMs you can use with the tutorials are gemma3:270m (~300 MB file storage, ~600 MB RAM) and qwen3:0.6b (~500 MB file storage, ~800 MB RAM). You likely won’t be satisfied interacting with these models, but they are good enough to show that your solutions are working. If you want to interact with more capable LLMs, you will need a server with more resources, for which you will have to pay. Ollama’s model examples list some models you can run on Ollama, along with their sizes. For a more comprehensive list, see Ollama’s model library.
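As a rough illustration of the sizing constraint above, the sketch below checks which of the two models fit in a given amount of RAM. The figures are the approximate ones quoted in this tutorial; the `models_that_fit` helper and its `reserved_mb` parameter (memory set aside for the OS and chatterd) are hypothetical names introduced only for this example.

```python
# Approximate footprints quoted in this tutorial, in MB. Treat them as rough estimates.
MODELS = {
    "gemma3:270m": {"disk_mb": 300, "ram_mb": 600},
    "qwen3:0.6b": {"disk_mb": 500, "ram_mb": 800},
}


def models_that_fit(total_ram_mb: int, reserved_mb: int = 0) -> list[str]:
    """Return the models whose estimated RAM use fits in the available memory.

    reserved_mb is a hypothetical allowance for the OS and other processes.
    """
    available = total_ram_mb - reserved_mb
    return sorted(name for name, size in MODELS.items() if size["ram_mb"] <= available)


if __name__ == "__main__":
    print(models_that_fit(1024))                   # ['gemma3:270m', 'qwen3:0.6b']
    print(models_that_fit(1024, reserved_mb=300))  # ['gemma3:270m']
```

Actual memory use depends on context length and on what else runs on the server, so these numbers are only a first check before you pull a model.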

For this course, we will assume you are running on a free-tier back end and

we will only provide instructions on how to set up a free-tier back end.

If you used AWS in a different course and want to limit charges beyond your free allotment, you can use GCP in this course. Similarly, if you plan to host your team’s projects on your AWS free tier, you can set up a GCP instance for the tutorials.

Please click the relevant link to view the instructions to set up your host:

and return here to resume the server setup once you have your instance running.

WARNING: if you need help with your back-end server setup and code, we can only help if your back end is running on AWS or GCP. For example, in the past, students have been assigned compromised Digital Ocean IP addresses that were blocked by the rest of the Internet. Unfortunately, we are not able to help solve this issue, and students’ only recourse would be to set up a new server, with a clean IP address, from scratch.

Updating packages

Login/ssh to your newly instantiated server and run the following:

server$ sudo apt update

server$ sudo apt upgrade

If you see *** System restart required *** when you ssh to your server,

immediately run:

server$ sync

server$ sudo reboot

The server will end your ssh session. Wait a few minutes for the system

to reboot before you ssh to your server again.

Clone your course tutorial repo

Clone your course tutorial GitHub repo so that you can push your back-end files for submission:

- First, on your browser, navigate to your course tutorial GitHub repo

- Click the green Code button and copy the URL to your clipboard by clicking the clipboard icon next to the URL

- Then on your back-end server:

server$ cd ~

server$ git clone <paste the URL you copied above> reactive

If you haven’t already, you will need to create a personal access token to use HTTPS Git.

If all goes well, your assignment repo should be cloned to ~/reactive.

Check that:

server$ ls ~/reactive

shows the content of your reactive git repo, including your llmprompt front end.

Installing Ollama

To force Ollama to run on CPU only, so as not to incur GPU charges, enter on your shell:

server$ sudo su

server# echo "CUDA_VISIBLE_DEVICES=-1" >> /etc/environment

server# exit

Then install curl so that we can grab Ollama:

server$ sudo apt install curl

server$ hash -r

server$ sudo curl -fsSL https://ollama.com/install.sh | sh

After the last command above completes, you should see, and want to see, the following warning:

WARNING: No NVIDIA/AMD GPU detected. Ollama will run in CPU-only mode.

Otherwise, you will be paying for GPU usage in addition to your instance usage.

After you’ve installed Ollama, pull the gemma3:270m model:

server$ ollama pull gemma3:270m

As mentioned earlier, you likely won’t be satisfied interacting with gemma3:270m (291 MB), but it is good enough to show that your solutions work.

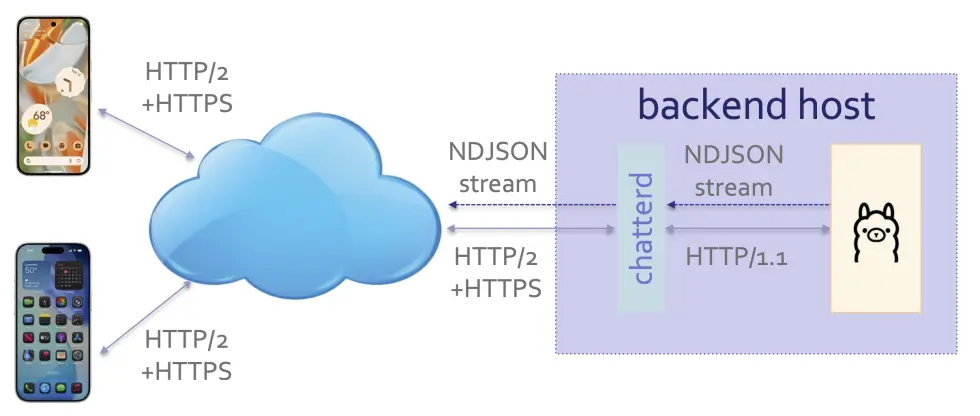

Ollama only accepts HTTP/1.1 connections and, by default, only from the same host it is running on.

Mobile platforms, on the other hand, require published apps to connect over HTTP/2, encrypted (HTTPS).

In this course, we create our own back-end server, which we call chatterd (d for “daemon”, a

long-running background Unix process that acts as a server), not only to serve as a simple proxy

to bridge HTTP/2+HTTPS to HTTP/1.1, but also to act as a gateway so that your Ollama instance is

not running exposed to the whole Internet (though you should not rely on it as any kind of permanent

security measure!).

chatterd serving as HTTP/2 proxy for Ollama

Preparing certificates for HTTPS

Apple requires apps to use HTTPS, the secure version of HTTP. Android has followed suit and defaults to blocking all cleartext (HTTP) traffic.

To support HTTPS, we first need a public key signed by a Certification Authority (CA). Obtaining

a certificate from a legitimate CA requires that your server have a fully qualified domain name (FQDN),

such as www.eecs.umich.edu, which would require extra setup. Instead, we opt to generate our own

certificate, which can only be used during development but is good enough for the course.

Start by installing wget so that we can grab the configuration file, selfsigned.cnf, which

we will use to generate a certificate and its corresponding private key:

server$ sudo apt install wget

server$ hash -r

server$ wget https://reactive.eecs.umich.edu/asns/selfsigned.cnf

Open selfsigned.cnf:

server$ vi selfsigned.cnf

When asked to open and/or edit a file on your back-end server, use your favorite editor. In this and all subsequent tutorials, we will assume vi (or vim or nvim) because it has the shortest name 😊. You can replace vi with your favorite editor; for example, nano has on-screen help and may be easier to pick up. See cheatsheet for a list of vi’s commands.

Search for the string YOUR_SERVER_IP and replace it with yours:

extendedKeyUsage = serverAuth

# 👇👇👇👇👇👇👇

subjectAltName = IP:YOUR_SERVER_IP # replace YOUR_SERVER_IP with yours

Can I use DNS instead of IP?

If your server has a fully qualified domain name (FQDN, e.g., reactive.eecs.umich.edu and

not the public DNS AWS/GCP assigned you), you can use it instead, tagging it as DNS

instead of IP in subjectAltName field above. With your IP address in the subjectAltName,

you can only access your server using its IP address, not by its FQDN, and vice versa.
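To make the IP-versus-DNS tagging concrete, here is a minimal sketch that picks the tag for a subjectAltName value. The `san_entry` helper is a hypothetical name used only for illustration; it relies on Python's standard `ipaddress` module to decide whether the string is an IP address, and treats anything else as a host name.

```python
import ipaddress


def san_entry(host: str) -> str:
    """Return a subjectAltName value tagged as IP or DNS, depending on the input."""
    try:
        ipaddress.ip_address(host)  # raises ValueError if host is not an IP address
    except ValueError:
        return f"DNS:{host}"
    return f"IP:{host}"


if __name__ == "__main__":
    print("subjectAltName = " + san_entry("192.0.2.10"))
    print("subjectAltName = " + san_entry("reactive.eecs.umich.edu"))
```

The address 192.0.2.10 is a documentation placeholder; substitute your own server's IP address or FQDN.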

Now create a self-signed key-certificate pair with the following command; here also,

replace YOUR_SERVER_IP in the command line with yours:

server$ openssl req -x509 -newkey rsa:4096 -sha256 -keyout /home/ubuntu/reactive/chatterd.key -out /home/ubuntu/reactive/chatterd.crt -days 100 -subj "/C=US/ST=MI/L=AA/O=UM/OU=CSE/CN=YOUR_SERVER_IP" -config selfsigned.cnf -nodes

# replace YOUR_SERVER_IP with yours

We will use the generated key and certificate files later.

Can I use mkcert instead?

Instead of generating a self-signed certificate, you could use the tool mkcert

to create a local Certification Authority (CA) and then generate certificates signed by that local CA.

With self-signed certificates, you must tell your device OS to trust each and every one of your self-signed certificates, one at a time. If you have a local CA, you only need to tell your device OS to trust your local CA’s root certificate, then all certificates generated by this local CA will be trusted by your device OS. The process to get your device OS to trust your self-signed certificate or the local CA’s root certificate is the same for both iOS and Android. If you need to trust multiple server certificates, then installing a local CA’s root certificate is more convenient. However, trusting a root CA’s certificate means you trust all certificates generated by that root, which seems to be a bit more vulnerable than just trusting one self-signed certificate. Since we are running only one server in this course, we’ve opted to use a single self-signed certificate.

Web server framework and chatterd

We provide instructions to set up the back-end server using different back-end stacks.

We will call our back-end server chatterd, regardless of the back-end stack you choose.

Note: in this and all subsequent tutorials and projects, we will assume

your folders/directories are named using the “canonical” names listed here. For example,

we will always refer to the directory where we put the back-end source code as ~/reactive/chatterd.

If you prefer to use your own names, you’re welcome to do so, but be aware that you’d

have to map your naming scheme to the canonical one in all the tutorials—plus we may

not be able to grade your tutorials correctly, requiring back and forth to sort things out.

chatterd

Please click the relevant link to set up the chatterd server with the llmprompt API

using the web framework of your choice:

Go | Python | Rust | TypeScript

and return here to resume the server setup once you have your web framework running.

Which back-end stack to use?

🔸 Go is a popular back-end language due to its learnability. We considered

several Go-based web frameworks and ended up using the Echo web

framework, which is built on the net/http web

server from Go’s standard library. Personally, I find Go awkward to use; see,

for example, I wish Go were a Better Programming Language.

🔸 JavaScript is familiar to web front-end developers. TypeScript

introduces static type checking to JavaScript. Node.js was the

first runtime environment that enables use of JavaScript outside a web browser, to build

back-end applications. Subsequently, Deno (written in Rust) and

Bun (written in Zig) have come on the scene, with better runtime

performance and smoother developer experience; unfortunately, neither fully supports HTTP/2

at the time of writing. We have chosen the popular Express web framework

running on Node.js, with TypeScript code compiled with tsc.

JavaScript documentation and community often assume code running in a web browser,

resulting in explanations that can be disorienting to native mobile developers.

The JavaScript stack relies heavily on third-party libraries. Each library developer

may use JavaScript in ways that are ever so slightly different from the others’.

JavaScript being an interpreted language also makes it slower than compiled languages.

For example, the TypeScript compiler achieved 10x performance increase by switching

from JavaScript to Go [1, 2].

🔸 Python: If you plan to use any ML-related libraries in your project’s back end,

a Python-based stack could mean easier integration. We considered several web frameworks

that support asynchronous Python operations and ended up choosing Starlette.

Starlette commonly runs on the uvicorn web server, created by the same author. To

support HTTP/2 however, we run Starlette on granian.

This is made possible by Python’s Asynchronous Server Gateway Interface (ASGI),

which was expressly designed to allow plug-and-play between various modules in the Python stack.

Interestingly, Granian uses hyper, written in Rust, as the

underlying web server. If you decide to use the Python stack, be aware that it has the

worst performance of the options here, by a wide margin. Python’s reliance

on indentation to delimit code blocks makes it bug prone. A Python IDE that uses a Language

Server to check for correct indentation is highly recommended.

🔸 Rust does static type checking and memory-ownership data-flow analysis, resulting

in a language that allows you to write safe and performant code, two goals that were hitherto

considered antithetical. Alone amongst the language choices here, Rust does not rely on

runtime garbage collection for memory management. We use the axum web framework,

built on the hyper web server and the tokio

asynchronous stack. The Rust-based axum-hyper stack consistently outperforms all the other options

here, by an order of magnitude. Should you contemplate using the Rust stack, however, be forewarned

that Rust does have a reputation for being hard to learn and frustrating to use,

mainly due to its reliance on its type system to enforce memory ownership, scoped lifetime, and safe concurrency.

The advantage is that the compiler can check and enforce these ownership, lifetime, and safe

access requirements statically at compile time. The frustration comes when your use of existing

APIs does not meet one or more of these requirements and you don’t know how to satisfy them—often,

you didn’t even know there’s a need for these requirements in the first place. A Rust IDE that

uses a Language Server for type inference can be immensely helpful, or rather, indispensable,

in resolving type mismatches.

Should you decide to switch from one back-end stack to another during the term, be sure

to disable the previous one completely before enabling the new one or you won’t be able to start

the new one due to the HTTP/S port already in use:

server$ sudo systemctl stop chatterd

server$ sudo systemctl daemon-reload

server$ sudo systemctl start chatterd
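If you want to confirm that the previous stack has really released the HTTPS port before starting the new one, a quick probe like the following can help. This is only a sketch: `port_in_use` is a hypothetical helper, and it assumes the old server would be listening on the same host where you run the check.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error number otherwise
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    # 443 is the standard HTTPS port; run this on the server itself.
    print("port 443 in use:", port_in_use(443))
```

A result of `True` after you have stopped chatterd suggests another process is still bound to the port.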

Evaluating web frameworks

Web frameworks in this course serve mostly simple CRUD operations. Our needs are thus modest, yet the need to support mobile apps, on both the Android and iOS platforms, brings its own requirements: the front-ends are not web browsers and do not understand JavaScript. The framework must support HTTPS, required by both iOS and Android. The apps in our tutorials are multi-modal and the web framework must support both download and upload of text, audio, images, and video. Some frameworks can support video transfer when communicating with one mobile platform but not the other. Others can transfer video on HTTP/1.1, but not on HTTP/2, even when they otherwise purportedly support HTTP/2. We require HTTP/2 in course projects that transmit Server-Sent Events (SSEs). We further require the following from web frameworks:

- integration with PostgreSQL with connection pool,

- handling of missing or duplicated trailing slash in URL endpoints,

- serving dynamically added static files from a designated directory,

- generating URLs for dynamically added static files,

- specifying upload size limit.
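To illustrate the trailing-slash requirement in the list above, here is a small sketch of the normalization a framework (or a piece of middleware) would need to perform so that /llmprompt, /llmprompt/, and /llmprompt// all reach the same handler. The `normalize_endpoint` function is a hypothetical stand-in written for this example; each framework exposes this behavior through its own settings or middleware.

```python
import re


def normalize_endpoint(path: str) -> str:
    """Collapse repeated slashes and ensure exactly one leading and trailing slash."""
    collapsed = re.sub(r"/+", "/", path)
    if not collapsed.startswith("/"):
        collapsed = "/" + collapsed
    return collapsed if collapsed.endswith("/") else collapsed + "/"


if __name__ == "__main__":
    for candidate in ("/llmprompt", "/llmprompt/", "/llmprompt//"):
        print(candidate, "->", normalize_endpoint(candidate))
```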

We limit our back-end stack choices to those supporting asynchronous operations–hence not Flask

nor Django for Python, for example. Finally, given our modest needs, we consider only frameworks

with minimal API surface—so-called microframeworks, eschewing more comprehensive,

“batteries included,” ones that integrate object-relational mapper (ORM) for database access,

OAuth and JSON web token (JWT) for authentication/authorization, auto-generated interactive

documentation (OpenAPI), automatic collection of instrumentation and logging (telemetry) data

(OpenTelemetry), etc. While such frameworks are useful for building and maintaining enterprise-level

production sites, they are usually also more opinionated and have a larger API surface.

This table comparing

microframework Starlette (our choice for Python) against the more

comprehensive and opinionated Starlite illustrates the differences.

(For further discussions on micro- vs. batteries-included frameworks see also, Which Is the

Best Python Web Framework: Django, Flask, or FastAPI?

and Flask as April Fools’ joke (slides).)

We found the TechEmpower Web Framework Benchmarks (TFB) to be a decent place to get to know what frameworks are available. Not all frameworks participate in the benchmark: three of the ones we’ve evaluated here do not, and it’s likely there are more we’re not aware of. As with most benchmarks, TFB entries do not always reflect common, casual usage of web frameworks, and its results should be taken with a grain of salt. Discounting those entries intended only to show how the benchmark can be gamed, we nevertheless found it useful to compare the relative performance of the frameworks we do consider. The linked table below lists the web frameworks, with their web server pairing, that we have either considered (C), evaluated (E), implemented (I), or finally deployed (D). For those we do not deploy, the notes column records the first roadblock we encountered, stopping us from considering the entry further. A couple of those not deployed we’re watching (W) for further development.

Testing llmprompt API

There are several ways to test HTTP APIs. You can use a REST API client with a graphical interface or you can use a command-line tool.

First, your chatterd must be running on your back-end host. If it’s not running, there is no server to test.

with a REST API client

To test HTTP APIs graphically, you could use a REST API client such as Postman,

Insomnia, or, if you use VSCode, the

EchoAPI

plugin. On an Android or iOS device or emulator/simulator, you can use Teste - API,

downloadable from both the Apple App Store and the Google Play Store.

Postman

The instructions for Postman in this and subsequent tutorials are for the desktop version. If you’re proficient with the web version, you can use the web version.

- First, for Settings > General > Request > HTTP version, choose HTTP/2 on the drop-down menu. Then three items down, disable Settings > General > SSL certificate verification. We use a self-signed certificate that cannot be verified with a trusted authority.

- Then in the main Postman screen, next to the Overview tab, click the + tab. You get a new Untitled Request screen. You should see GET listed under Untitled Request.

- Click on GET to show the drop-down menu and select POST.

- Enter https://YOUR_SERVER_IP/llmprompt/ in the field next to POST.

- Under the server URL you entered, there’s a menu with Body as the fourth element. Click on Body.

- You should see a submenu under Body. Click on raw.

- At the end of the submenu, click on TEXT and replace it with JSON in the drop-down menu.

- You can now enter the following in the box under the submenu:

{

"model": "gemma3:270m",

"prompt": "howdy?",

"stream": true

}

and click the big blue Send button.

If everything works as expected, the bottom pane of Postman should say, to the right of its menu line,

200 OK, and the pane should display NDJSON, with the first line looking something like:

{

"model": "gemma3:270m",

"created_at": "2025-07-23T21:10:23.893760402Z",

"response": "How",

"done": false

}

The "created_at" field will be different and the "response" field may also be different for you.
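Since the reply arrives as NDJSON (one JSON object per line), reading the full answer means concatenating the "response" fields until a line reports "done": true. The sketch below shows one way to do that; `merge_ndjson` is a hypothetical helper, and the sample lines in the usage example are made up for illustration, not captured server output.

```python
import json
from typing import Iterable


def merge_ndjson(lines: Iterable[str]) -> str:
    """Concatenate the "response" fields of an NDJSON stream into one string."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between JSON objects
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final object marks the end of the stream
    return "".join(parts)


if __name__ == "__main__":
    # Illustrative sample only; real output will differ.
    sample = [
        '{"model": "gemma3:270m", "response": "How", "done": false}',
        '{"model": "gemma3:270m", "response": "dy!", "done": false}',
        '{"model": "gemma3:270m", "response": "", "done": true}',
    ]
    print(merge_ndjson(sample))  # Howdy!
```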

A new REST API client called Apidog can auto-merge the return stream and display it as scrolling text. Unfortunately, as of the time of writing, it is not compatible with our Go/echo and TypeScript/express back ends. I have reported the issue to the company, but so far there is no resolution.

with a command-line tool

For all of the below, if you’re running a server without HTTPS, replace https with http throughout.

curl

To test HTTP POST requests with curl:

laptop$ curl -X POST -H "Content-Type: application/json" -d '{ "model": "gemma3:270m", "prompt": "howdy?", "stream": true }' --insecure https://YOUR_SERVER_IP/llmprompt/

The --insecure option tells curl not to verify your self-signed certificate.
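If you prefer to script the same test, the following sketch mirrors the curl command using only Python's standard library. The function names (`build_request`, `unverified_context`, `send`) are hypothetical and chosen for this example. Note that `urllib` speaks HTTP/1.1 only, so this assumes your chatterd accepts HTTP/1.1 connections as well as HTTP/2. Disabling certificate verification, like --insecure, is acceptable only for testing against your own self-signed certificate.

```python
import json
import ssl
import urllib.request


def build_request(server_ip: str, prompt: str, model: str = "gemma3:270m") -> urllib.request.Request:
    """Build the same POST request that the curl command sends."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": True}).encode("utf-8")
    return urllib.request.Request(
        f"https://{server_ip}/llmprompt/",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def unverified_context() -> ssl.SSLContext:
    """TLS context that skips certificate checks, like curl --insecure."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # must be disabled before setting CERT_NONE
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def send(server_ip: str, prompt: str) -> None:
    """Send the prompt and print each NDJSON line as it arrives."""
    req = build_request(server_ip, prompt)
    with urllib.request.urlopen(req, context=unverified_context()) as resp:
        for raw in resp:
            print(raw.decode("utf-8").rstrip())


# Example call (replace YOUR_SERVER_IP with yours):
# send("YOUR_SERVER_IP", "howdy?")
```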

HTTPie

You can also use HTTPie instead of curl to test on the command line:

laptop$ echo '{ "model": "gemma3:270m", "prompt": "howdy?", "stream": true }' | http --verify=no POST https://YOUR_SERVER_IP/llmprompt/

# output:

HTTP/1.1 200 OK

content-type: application/x-ndjson

date: Wed, 23 Jul 2025 21:29:28 GMT

transfer-encoding: chunked

{"model":"gemma3:270m","created_at":"2025-07-23T21:29:28.409391556Z","response":"How","done":false}

# and a few more lines . . . .

The --verify=no option tells HTTPie not to verify your self-signed certificate.

Automatic chatterd restart

The following steps apply to all variants of the web server. The only difference is in

the ExecStart entry of the chatterd.service file. Go and Rust have the same entry.

Python and TypeScript each have a different entry. I have all options listed but commented

out in the file below. You need to uncomment the line(s) corresponding to your framework

of choice.

Once you have your server tested, to run it automatically on system reboot or on failure, first create the service configuration file:

server$ sudo vi /etc/systemd/system/chatterd.service

then put the following in the file:

[Unit]

Description=EECS Reactive chatterd

After=network.target

StartLimitIntervalSec=0

[Service]

Type=simple

Restart=on-failure

RestartSec=1

User=root

Group=www-data

WorkingDirectory=/home/ubuntu/reactive/chatterd

# >>>>>>>>>>>>>>>>> Go or Rust: uncomment the following line: <<<<<<<<<<<<<<<<<

#ExecStart=/home/ubuntu/reactive/chatterd/chatterd

# >>>>>>>>>>>>>>>>> TypeScript: uncomment the following two lines: <<<<<<<<<<<<<<<<<

#Environment=NODE_ENV=production

#ExecStart=/usr/local/bin/pm2-runtime start -i 3 main.js

# >>> Sometimes pm2-runtime is installed in /usr/bin. Double check your path with:

# >>> server$ which pm2-runtime

# >>> and use the output in the ExecStart above.

# >>>>>>>>>>>>>>>>>>> Python: uncomment the following line: <<<<<<<<<<<<<<<<<<<

#ExecStart=/home/ubuntu/reactive/chatterd/.venv/bin/granian --host 0.0.0.0 --port 443 --interface asgi --ssl-certificate /home/ubuntu/reactive/chatterd.crt --ssl-keyfile /home/ubuntu/reactive/chatterd.key --access-log --respawn-failed-workers --respawn-interval 1.0 --workers 3 --workers-kill-timeout 1 main:server

[Install]

WantedBy=multi-user.target

To test the service configuration file, run:

server$ sudo systemctl daemon-reload

server$ sudo systemctl start chatterd

server$ systemctl status chatterd

# first 3 lines of output:

● chatterd.service - EECS Reactive chatterd

Loaded: loaded (/etc/systemd/system/chatterd.service; disabled; vendor preset: enabled)

Active: active (running) since Thu 2024-10-15 01:28:56 EDT; 2min 30s ago

. . . 👆👆👆👆👆👆👆👆👆👆

The last line should say, Active: active (running).

To have chatterd start automatically when the system reboots, run:

server$ sudo systemctl enable chatterd

server$ systemctl status chatterd

# first 2 lines of output:

● chatterd.service - EECS Reactive chatterd

Loaded: loaded (/etc/systemd/system/chatterd.service; enabled; vendor preset: enabled)

. . . 👆👆👆👆👆

The second field inside the parentheses in the second line should now say “enabled.”
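If you would rather check these two fields from a script than by eye, the sketch below pulls them out of the status text. The `parse_status` helper is hypothetical and assumes the output layout shown above; for scripting on the server itself, `systemctl is-active chatterd` and `systemctl is-enabled chatterd` report the same information directly.

```python
def parse_status(output: str) -> dict:
    """Extract the enabled and running states from `systemctl status` text."""
    state = {"enabled": False, "running": False}
    for line in output.splitlines():
        line = line.strip()
        if line.startswith("Loaded:"):
            # The enablement state is the second semicolon-separated field
            # inside the first pair of parentheses.
            inside = line[line.find("(") + 1 : line.find(")")]
            fields = [field.strip() for field in inside.split(";")]
            state["enabled"] = len(fields) > 1 and fields[1] == "enabled"
        elif line.startswith("Active:"):
            state["running"] = line.startswith("Active: active (running)")
    return state


if __name__ == "__main__":
    sample = """\
● chatterd.service - EECS Reactive chatterd
     Loaded: loaded (/etc/systemd/system/chatterd.service; enabled; vendor preset: enabled)
     Active: active (running) since Thu 2024-10-15 01:28:56 EDT; 2min 30s ago
"""
    print(parse_status(sample))  # {'enabled': True, 'running': True}
```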

For each tutorial, leave your chatterd running until you have received your tutorial grade!

To view chatterd’s console output, run:

server$ sudo systemctl status chatterd

To view continuous status updates from chatterd, run:

server$ sudo journalctl -fu chatterd

TIP:

server$ sudo systemctl status chatterd

is your BEST FRIEND in debugging your server. If you get an HTTP error code 500 Internal Server Error

or if you just don’t know whether your HTTP request has made it to the server, first thing you do is run

sudo systemctl status chatterd on your server and study its output, including any error messages and

debug printouts from your server.

Every time you rebuild your Go or Rust server or make changes to any of your TypeScript or Python files,

you need to restart chatterd:

server$ sudo systemctl restart chatterd

If you change the /etc/systemd/system/chatterd.service configuration file, run:

server$ sudo systemctl daemon-reload

before restarting your chatterd.

To turn off auto restart:

server$ sudo systemctl disable chatterd

WARNING: You will not get full credit if your front end is not set up to work with your back end!

That’s all we need to do to prepare the back end. Before you return to work on your front end, wrap up your work here by submitting your files to GitHub.

Submitting your back end

We will only grade files committed to the main branch. If you use multiple branches,

please merge them all to the main branch for submission.

Navigate to your reactive folder:

server$ cd ~/reactive/

Run:

server$ cp /etc/systemd/system/chatterd.service chatterd/

Then run:

server$ git add chatterd

You can check git status by running:

server$ git status

You should see the newly added files.

Commit changes to the local repo:

server$ git commit -am "llmprompt back end"

and push your chatterd folder to the remote GitHub repo:

server$ git push

If git push fails due to changes made to the remote repo by your tutorial

partner, you must run git pull first. Then you may have to resolve any

conflicts before you can git push again.

Go to the GitHub website to confirm that your back-end files have

been uploaded to your GitHub repo.

You can now return to complete the front end: Android | iOS.

References

Setup

- Ubuntu setup

- GCP Instructions to set up ssh access with public key pair.

- chmod in WSL

- Creating a Linux service with systemd

Security

- What is HTTPS

- Everything about HTTPS and SSL

- New self-signed SSL Certificate for iOS 13

- Self-Signed SSL Certificate for Nginx

- Connecting mobile apps (iOS and Android) to backends for development with SSL

- How to do the CHMOD 400 Equivalent Command on Windows

Ollama

- Developing Generative AI Applications in Go with Ollama (and Tiny models)

- Example Ollama models

- Awesome SLMs

- Ollama model library (most up-to-date)

- Ollama API

- How to Use Ollama

- Independent analysis of AI

LLMs

- A Very Gentle Introduction to Large Language Models without the Hype

- Large language models, explained with a minimum of math and jargon

- How computers got shockingly good at recognizing images

- Few-Shot Prompting

- A Deep Dive into Building Enterprise grade Generative AI Solutions

| Prepared by Tiberiu Vilcu, Wendan Jiang, Alexander Wu, Benjamin Brengman, Ollie Elmgren, Luke Wassink, Mark Wassink, Nowrin Mohamed, Chenglin Li, Xin Jie ‘Joyce’ Liu, Yibo Pi, and Sugih Jamin | Last updated December 22nd, 2025 |