Tutorial: llmPrompt

Image: Live Chat Symbol Icon, AI Tools Icon Set, ChatGPT

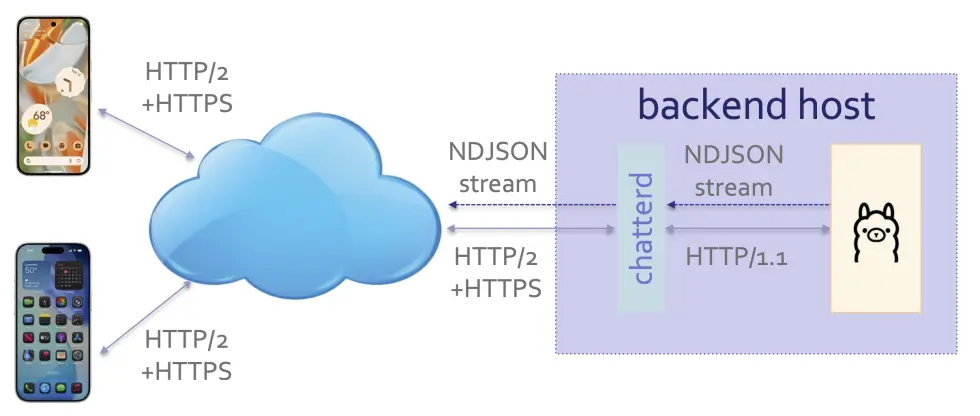

We will build an app called Chatter and endow it with various capabilities in each tutorial. In this tutorial, Chatter allows users to post prompts to Ollama running on a back-end server. Due to discrepancies between Ollama’s networking capabilities and the networking requirements of mobile platforms, we need a back-end server of our own, which we call chatterd, to serve as a proxy bridging the two. The Chatter mobile front end displays Ollama’s streamed response as soon as each element arrives. Treat messages you send to chatterd and Ollama as public utterances with no reasonable expectation of privacy.

chatterd serving as HTTP/2 proxy for Ollama

About the tutorials

This tutorial may be completed individually or in teams of at most 2. You can partner differently for each tutorial.

We don’t assume any mobile or back-end development experience on your part. We will start by introducing you to the front-end integrated development environment (IDE) and back-end development tools.

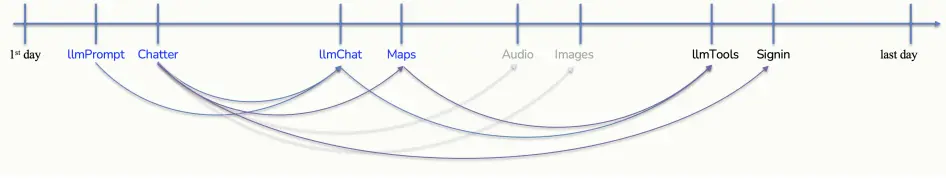

Are the tutorials cumulative?

Tutorials 1 (llmPrompt) and 2 (Chatter) form the foundation for all subsequent tutorials. The back end you build from tutorials 1 and 2 will be used throughout the term. Tutorial 5 (llmTools) further builds on tutorials 3 (llmChat) and 4 (Maps).

You should therefore keep a clean zipped copy of each tutorial for subsequent use and modification. The image below summarizes the dependencies of later tutorials on earlier ones.

Tutorial dependencies

Since the tutorials require manual setup of your computing infrastructure, customized to each person or team, including an individualized security certificate, we cannot provide a ready-made solution for you to download. Each person or team must have their own mobile scaffolding and infrastructure from tutorials 1 and 2 to complete the later tutorials and projects. If you run into any difficulties setting up yours, please seek help from the teaching staff as early as possible.

For the ULCS (non-MDE) version of the course, each ULCS project is supported by two tutorials. Tutorials 1 and 2 show how to accomplish the main tasks in Project 1, etc.

The tutorials can mostly be completed by copying and pasting code from the specs. However, you will be more productive on homework and projects if you understand the code. As a general rule of thumb, the less time you spend reading, the more time you will spend debugging.

Objectives

Front end:

- Familiarize yourself with the development environment

- Start learning Kotlin/Swift syntax and language features

- Learn declarative UI with reactive state management (Compose for Android, SwiftUI for iOS)

- Observe the unidirectional data flow architecture

- Learn HTTP GET and POST asynchronous exchange

- Learn JSON serialization/deserialization

- Use reactive UI to display asynchronous events: display each newline-delimited JSON (NDJSON) streaming element sent by Ollama as it arrives

- Install a self-signed certificate on your mobile device

Back end:

- Set up and run Ollama on a back-end server

- Generate self-signed private key and its public key certificate

- Set up an HTTPS server with a self-signed certificate

- Introduction to URL path routing

- Use JSON for HTTP request/response to communicate with both Ollama and your front end

- Introduction to Ollama api/generate

- Forward NDJSON streaming response from Ollama to the front end

There is a TODO item at the end of this spec that you must complete as part of the tutorial.

API and protocol handshake

For this tutorial, Chatter has two APIs:

- /: uses HTTP GET to confirm that the server is up and running!

- /llmprompt: uses HTTP POST to post the user’s prompt for Ollama’s generate API as a JSON Object, which the chatterd back end forwards to Ollama. Upon receiving Ollama’s response, the back end simply forwards the stream to the client.

Using this syntax:

url-endpoint
-> request: data sent to Server
<- response: data sent to Client followed by HTTP Status

The protocol handshake consists of:

/
-> HTTP GET
<- "EECS Reactive chatterd" 200 OK

/llmprompt
-> HTTP POST { model, prompt, stream }
<- { newline-delimited JSON Object(s) } 200 OK

Note: ‘/’ is disabled on mada.eecs.umich.edu
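The handshake above can be pictured as a small routing table that maps a method and URL path to a handler. The following is only a framework-free sketch in Python, not how Echo, Express, Starlette, or axum implement routing; the names `top`, `llmprompt`, `ROUTES`, and `dispatch` are hypothetical, and the `llmprompt` handler is a placeholder that does not contact Ollama.

```python
from typing import Callable, Dict, Tuple

Response = Tuple[int, str]          # (HTTP status code, response body)
Handler = Callable[[str], Response]


def top(_body: str) -> Response:
    """Handler for GET /: confirms the server is up."""
    return 200, "EECS Reactive chatterd"


def llmprompt(_body: str) -> Response:
    """Placeholder for POST /llmprompt.

    A real back end would forward the request body to Ollama and stream
    the reply back; this sketch returns an empty body instead.
    """
    return 200, ""


# Hypothetical routing table keyed by (method, path).
ROUTES: Dict[Tuple[str, str], Handler] = {
    ("GET", "/"): top,
    ("POST", "/llmprompt"): llmprompt,
}


def dispatch(method: str, path: str, body: str = "",
             routes: Dict[Tuple[str, str], Handler] = ROUTES) -> Response:
    """Look up the handler for (method, path); unknown routes get 404."""
    handler = routes.get((method, path))
    if handler is None:
        return 404, "Not Found"
    return handler(body)


status, text = dispatch("GET", "/")
print(status, text)
```

In this sketch, any method and path pair that is missing from the table falls through to 404, which is also what a client sees when an endpoint has been removed from the server.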

Data format

To post a prompt to Ollama with the llmprompt API, the front-end client sends a JSON object consisting of a model field, a prompt field, and a stream field. The model field holds the name of the model, which in this tutorial we set to gemma3:270m. The prompt field carries the prompt to Ollama. The stream field indicates whether Ollama should stream its response or batch it and send it in one message. For example:

{
    "model": "gemma3:270m",
    "prompt": "howdy?",
    "stream": true
}
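As an illustration of the request format, the sketch below serializes the same three fields into a JSON request body using Python's standard library. The function name `build_prompt_request` is hypothetical, and the tutorial's front ends are written in Kotlin or Swift rather than Python, so treat this only as a language-neutral picture of the payload.

```python
import json


def build_prompt_request(prompt: str,
                         model: str = "gemma3:270m",
                         stream: bool = True) -> bytes:
    """Serialize the model, prompt, and stream fields as a UTF-8 JSON body."""
    payload = {"model": model, "prompt": prompt, "stream": stream}
    return json.dumps(payload).encode("utf-8")


body = build_prompt_request("howdy?")
print(body.decode("utf-8"))
```

A client would send `body` in an HTTP POST to /llmprompt with a JSON content type.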

Upon receiving this JSON object, chatterd simply forwards it to Ollama. If streaming is turned on, the reply from Ollama is in the form of a newline-delimited JSON (NDJSON) stream; otherwise, it responds with a single JSON Object. The JSON objects carried in Ollama’s response are specified in the Ollama documentation for api/generate.

Either way, we only forward the response field of Ollama’s response. We also do not accumulate Ollama’s reply to be returned as a single response at completion.
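To make the NDJSON handling concrete, here is a minimal Python sketch that reads a stream one line at a time, parses each line as a JSON object, and yields only its response field as soon as it is available. The function name `iter_response_fragments` is hypothetical, and the three sample lines are made up for illustration; they imitate the `response` and `done` fields described in the Ollama documentation for api/generate but are not captured output.

```python
import json
from typing import Iterable, Iterator


def iter_response_fragments(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield the "response" field of each NDJSON line as it arrives."""
    for raw in lines:
        line = raw.strip()
        if not line:
            continue                      # skip blank lines between objects
        obj = json.loads(line)
        fragment = obj.get("response", "")
        if fragment:
            yield fragment                # hand over each piece immediately
        if obj.get("done"):
            break                         # final object of the stream


# Made-up sample stream, for illustration only.
sample_stream = [
    b'{"model":"gemma3:270m","response":"Hi","done":false}\n',
    b'{"model":"gemma3:270m","response":" there","done":false}\n',
    b'{"model":"gemma3:270m","response":"","done":true}\n',
]

fragments = []
for fragment in iter_response_fragments(sample_stream):
    fragments.append(fragment)            # kept only so the result can be inspected
    print(fragment, end="", flush=True)   # display without waiting for completion
print()
```

Because the function is a generator, each fragment can be displayed or forwarded before the next line has been read, which matches the no-accumulation behavior described above.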

You can use the LLM model gemma3:270m on Ollama. This model runs on less than 600 MB of memory, so it should fit into a *-micro AWS/GCP instance. The sample back end on mada.eecs.umich.edu can, in addition, run the Ollama model gemma3 (a.k.a. gemma3:4b) for a more satisfying interaction.

Specifications

There are TWO pieces to this tutorial: a front-end mobile client and a back-end server. For the front end, you can build either for Android with Jetpack Compose or for iOS in SwiftUI. For the back end, you can choose between these web microframeworks: Echo (Go), Express (TypeScript), Starlette (Python), or axum (Rust).

You only need to build one front end, AND one of the alternative back ends.

Until you have a working back end of your own, you can use mada.eecs.umich.edu to test your front end. To receive full credit, your front end MUST work with both mada.eecs.umich.edu and your own back end, with model gemma3:270m.

IMPORTANT: unless you plan to learn to program both Android and iOS, you should do the tutorials on the same platform as that of your projects.

TODO:

WARNING: We found that an HTTP(S) server with the / API endpoint seems to attract more random probes from sundry hosts on the Internet. Disable the / API endpoint in your back-end server after you’re done testing your setup, before you turn in your submission. With the / API endpoint disabled, trying to access it using a REST API client or the Chrome browser should result in HTTP error code 404: Not Found (Safari may simply show a blank page instead of the 404 error code).
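If you prefer a scripted check over a browser, the sketch below shows one way to read the status code of a GET request with Python's standard library. It is self-contained and runs against a throwaway local stand-in server over plain HTTP, which is an assumption made only so the example can run anywhere; your actual chatterd is served over HTTPS with a self-signed certificate, so checking it would additionally require an HTTPS connection that trusts that certificate. The names `DisabledRootHandler` and `get_status` are hypothetical.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class DisabledRootHandler(BaseHTTPRequestHandler):
    """Stand-in server with no GET routes, so every GET receives 404."""

    def do_GET(self):
        self.send_error(404)

    def log_message(self, *args):
        pass  # keep the example's output quiet


def get_status(host: str, port: int, path: str = "/") -> int:
    """Issue a GET request and return the HTTP status code."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", path)
        return conn.getresponse().status
    finally:
        conn.close()


# Port 0 asks the operating system for any free port.
server = HTTPServer(("127.0.0.1", 0), DisabledRootHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

status = get_status("127.0.0.1", server.server_port, "/")
server.shutdown()
server.server_close()
print(status)
```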

| Prepared by Sugih Jamin | Last updated: December 22nd, 2025 |