Tutorial: llmTools Backend

DUE Wed, 11/12, 2 pm

You will need the HTTPS infrastructure from the first tutorial, the PostgreSQL database set up in the second tutorial, and the appID setup in the llmChat tutorial.

Install updates

Remember to install the updates available for your Ubuntu back end. If N in the following notice, shown when you ssh to your back-end server, is not 0,

N updates can be applied immediately.

run the following:

server$ sudo apt update

server$ sudo apt upgrade

Failure to update your packages could cause your solution to stop working, with no warning that outdated packages are the cause, and it also leaves your server vulnerable to security exploits.

Any time you see *** System restart required *** when you ssh to your server, immediately run:

server$ sync

server$ sudo reboot

Your ssh session will be ended at the server. Wait a few minutes for the system to reboot before you ssh to your server again.

Tool calling workflow

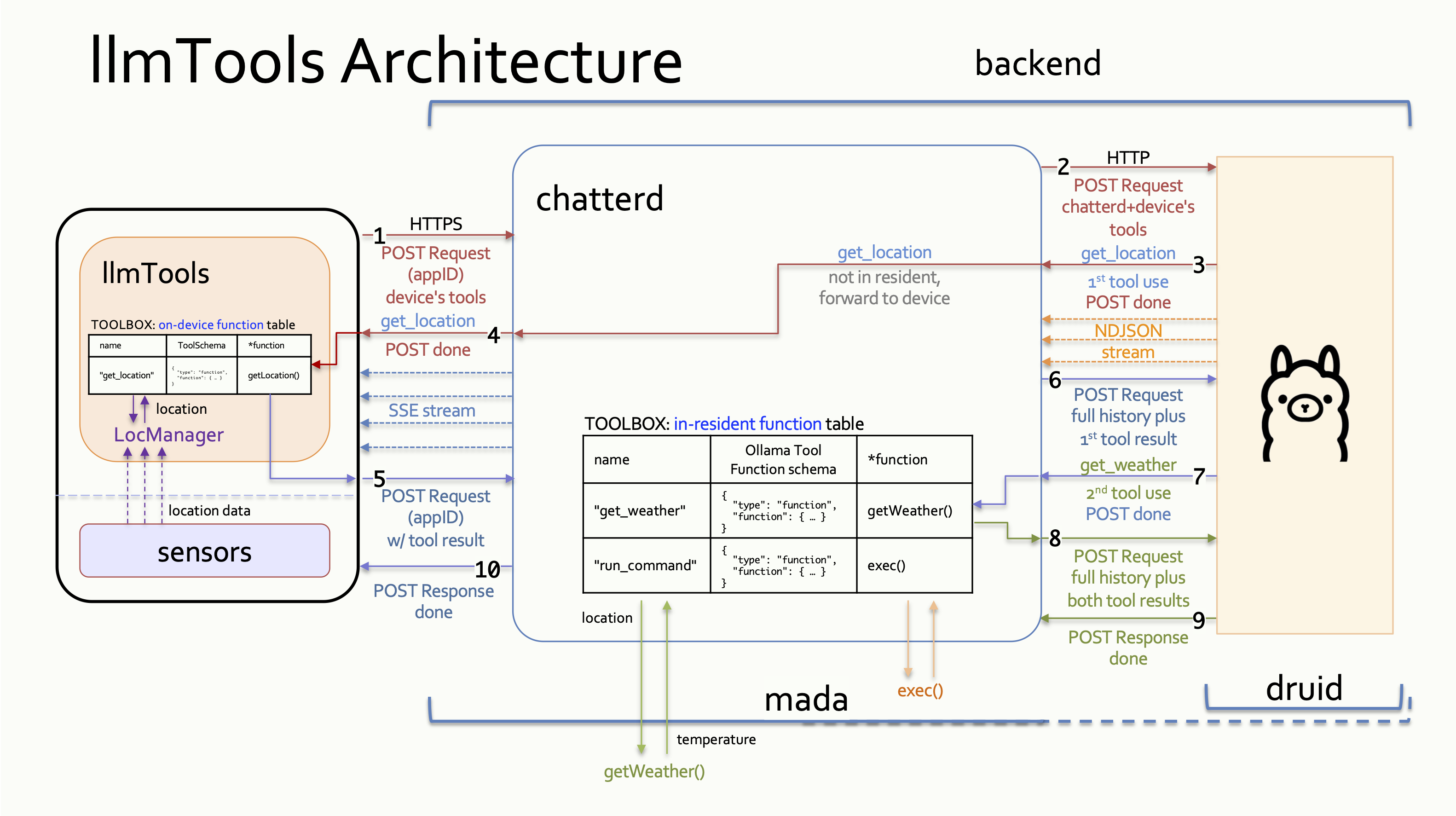

In addition to providing all the capabilities of the llmChat tutorial’s backend, we add tool calling infrastructure to chatterd in this tutorial. Ollama is a stateless server, meaning that it doesn’t save any state or data from a request/response interaction with the client. Consequently, each link in a chain of multiple tool calls is considered a separate and standalone interaction. For example, a prompt requiring chained tool calls (first call get_location, then call get_weather) is to Ollama three separate interactions (or HTTP rounds):

- The first interaction carries the prompt and the definition of the two tools. This round is completed by Ollama’s returning a tool call for get_location. This initial round is depicted in the figure as the red communication lines numbered 2 to 3.

- The second interaction carries all messages from the first exchange, including Ollama’s completion and the result of the get_location tool call. This round is completed by Ollama’s returning the second tool call, for get_weather. In the figure, this round is depicted as the purple communication lines numbered 6 to 7.

- The third interaction carries all of the above and the result of the get_weather tool call. This round is completed by Ollama’s returning the combined results of the two tool calls as the final completion of the original prompt. This final round is depicted in the figure as the green communication lines numbered 8 and 9.
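The three rounds above can be sketched as a small simulation. This is only an illustration under stated assumptions, not chatterd's implementation: `fake_ollama`, `run_tool`, and `chat_with_tools` are hypothetical names, the tool results are canned values, and the tool definitions and tool-result messages use a simplified form of the JSON style accepted by Ollama's chat API. The point it shows is that the stand-in server keeps no state, so every round must resend the whole message history.

```python
# Hypothetical tool definitions (simplified); both are sent with every round.
TOOLS = [
    {"type": "function", "function": {
        "name": "get_location",
        "description": "Get the user's current latitude and longitude",
        "parameters": {"type": "object", "properties": {}, "required": []}}},
    {"type": "function", "function": {
        "name": "get_weather",
        "description": "Get the current temperature at a latitude/longitude",
        "parameters": {"type": "object",
                       "properties": {"lat": {"type": "string"},
                                      "lon": {"type": "string"}},
                       "required": ["lat", "lon"]}}},
]


def fake_ollama(request):
    """Stand-in for a stateless LLM server: it sees only what is in `request`."""
    results = [m for m in request["messages"] if m["role"] == "tool"]
    if len(results) == 0:  # round 1: ask for the location
        call = {"function": {"name": "get_location", "arguments": {}}}
        return {"role": "assistant", "content": "", "tool_calls": [call]}
    if len(results) == 1:  # round 2: ask for the weather at that location
        lat, lon = results[0]["content"].split(",")
        call = {"function": {"name": "get_weather",
                             "arguments": {"lat": lat, "lon": lon}}}
        return {"role": "assistant", "content": "", "tool_calls": [call]}
    # round 3: both tool results are in the history, so produce the completion
    return {"role": "assistant",
            "content": f"The weather at your location: {results[1]['content']}"}


def run_tool(name, args):
    """Canned tool results, for illustration only."""
    if name == "get_location":
        return "42.29,-83.71"
    if name == "get_weather":
        return f"50.5F at lat {args['lat']}, lon {args['lon']}"
    raise KeyError(f"unknown tool: {name}")


def chat_with_tools(prompt):
    """Run HTTP-like rounds until the reply carries no tool call."""
    messages = [{"role": "user", "content": prompt}]
    rounds = 0
    while True:
        rounds += 1
        # The full history and the tool definitions are resent on every round.
        reply = fake_ollama({"messages": list(messages), "tools": TOOLS})
        messages.append(reply)
        calls = reply.get("tool_calls")
        if not calls:
            return reply["content"], rounds, messages
        for call in calls:
            fn = call["function"]
            messages.append({"role": "tool",
                             "content": run_tool(fn["name"], fn["arguments"])})
```

Calling `chat_with_tools("What is the weather at my location?")` takes three rounds in this sketch, and the history grows by one assistant message and one tool result in each of the first two rounds.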

Tool-call Handling: chatterd handling resident and non-resident tool calls from Ollama to address the prompt, “What is the weather at my location?”

The initial request from the client carries the original prompt and the get_location tool definition (1). Upon receiving this request, chatterd tags on the get_weather tool and forwards it to Ollama (2). When Ollama’s response arrives (3), chatterd inspects the response for any tool call. If there is a tool call for a non-resident tool, chatterd forwards the call to the frontend (4). This concludes the initial HTTP request/response interaction.

If the tool exists on the frontend, it returns the result as a new HTTP POST connection (5). If the tool doesn’t exist, the frontend informs the user that Ollama has made an unknown tool call and the full interaction is considered completed.

If the response from Ollama contains a call for a tool resident on the backend, however, chatterd calls the tool (7) and concludes the ongoing HTTP interaction. Then chatterd opens a new HTTP POST connection to Ollama to return the result of the resident tool call (8), “short circuiting” the frontend.

Finally, Ollama assembles the result of the two tool calls and returns a completion to the original prompt (9), which chatterd forwards to the client (10), completing the final interaction.
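The decision chatterd makes on each Ollama response, as described above, can be sketched as a lookup in a table of resident tools. This is a minimal sketch under stated assumptions: `route_tool_call`, `RESIDENT_TOOLS`, and the two action labels are hypothetical names chosen for illustration, and the `get_weather` stub returns a canned value instead of querying a weather service.

```python
def get_weather(args):
    # Stub: a real resident tool would query the weather service here.
    return f"Weather at lat: {args['lat']}, lon: {args['lon']} is 50.5F (canned value)"


# Tools that live on the backend, keyed by the name Ollama uses to call them.
RESIDENT_TOOLS = {"get_weather": get_weather}


def route_tool_call(tool_call, resident_tools=RESIDENT_TOOLS):
    """Decide where a tool call from Ollama should go.

    A non-resident tool is forwarded to the frontend. A resident tool is
    run on the backend and its result is packaged as a tool message to be
    sent back to Ollama, bypassing the frontend.
    """
    function = tool_call["function"]
    tool = resident_tools.get(function["name"])
    if tool is None:
        return {"action": "forward_to_frontend", "tool_call": tool_call}
    result = tool(function.get("arguments", {}))
    return {"action": "return_to_ollama",
            "message": {"role": "tool", "content": result}}
```

With this table, a call for get_location is routed to the frontend, while a call for get_weather is answered on the backend.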

From HTTP 1.1 on, the protocol automatically tries to reuse the underlying TCP connections to transfer multiple requests/responses between the same end points, to avoid the overhead of opening new connections, though incorrect use may still prevent connection reuse.

To implement tool calling on chatterd, please click the relevant link for the web framework of your choice, and return here afterwards to test your server:

| Go | Python | Rust | TypeScript |

Testing

This is only a limited test of the backend call to the OpenMeteo API and its handling of resident tools. A full test of tool calling with on-device tool calls would have to wait until your frontend is implemented.

As usual, you can use either a graphical tool such as Postman or a CLI tool such as curl.

/weather API

To test your /weather API, in Postman, point your GET request to https://YOUR_SERVER_IP/weather with the following Body > raw JSON content:

{

"lat": "42.29",

"lon": "-83.71"

}

and click Send.

The same example using curl:

laptop$ curl -X GET -H "Content-Type: application/json" -H "Accept: application/json" -d '{ "lat": "42.29", "lon": "-83.71" }' https://YOUR_SERVER_IP/weather
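If you prefer to script the test, the same request can be sketched with Python's standard library. The helper names `build_weather_request` and `fetch_weather` are hypothetical, and the sketch assumes your server's certificate is trusted by your laptop; if it is not, `urlopen` will raise a certificate error.

```python
import json
import urllib.request


def build_weather_request(base_url, lat, lon):
    """Build a GET request to /weather carrying a JSON body, as in the curl example."""
    body = json.dumps({"lat": lat, "lon": lon}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/weather",
        data=body,
        method="GET",  # stated explicitly; with data set, urllib would default to POST
        headers={"Content-Type": "application/json",
                 "Accept": "application/json"},
    )


def fetch_weather(base_url, lat, lon, timeout=30):
    """Send the request and return the response body as text."""
    request = build_weather_request(base_url, lat, lon)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

To run it, call `print(fetch_weather("https://YOUR_SERVER_IP", "42.29", "-83.71"))` with your own server address.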

/llmtools API for resident tools

You can also test your backend’s handling of resident tools. In Postman, point your POST request to https://YOUR_SERVER_IP/llmtools with this Body > raw JSON:

{

"appID": "com.apidog:getweather",

"model": "qwen3:0.6b",

"messages": [

{ "role": "user", "content": "What is the weather at lat/lon 42.29/-83.71?" }

],

"stream": true

}
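The same POST can be sketched in Python. Since "stream" is true, this sketch reads the response line by line as it arrives and yields each non-empty line unparsed, because the exact framing of the stream depends on how you implemented your server. The helper names `build_llmtools_request` and `stream_llmtools` are hypothetical, and the sketch assumes a certificate your laptop trusts.

```python
import json
import urllib.request


def build_llmtools_request(base_url, prompt,
                           model="qwen3:0.6b",
                           app_id="com.apidog:getweather"):
    """Build the POST request to /llmtools with the JSON body shown above."""
    payload = {
        "appID": app_id,
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": True,
    }
    return urllib.request.Request(
        f"{base_url}/llmtools",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def stream_llmtools(base_url, prompt, timeout=120):
    """Yield each non-empty line of the streamed response as it arrives."""
    request = build_llmtools_request(base_url, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        for raw_line in response:
            line = raw_line.decode("utf-8").rstrip("\r\n")
            if line:
                yield line
```

To run it, loop over `stream_llmtools("https://YOUR_SERVER_IP", "What is the weather at lat/lon 42.29/-83.71?")` and print each line.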

In all cases, you should see something like “Weather at lat: 42.28831, lon: -83.700775 is 50.5ºF” returned to you. (It’s ok for the lat/lon in the result to not match those in the prompt exactly.)

Once your frontend is completed, you can do end-to-end testing; see the End-to-end Testing section of the spec.

And with that, you’re done with your backend. Congrats!

WARNING: You will not get full credit if your front end is not set up to work with your backend!

Every time you rebuild your Go or Rust server or make changes to any of your JavaScript or Python files, you need to restart chatterd:

server$ sudo systemctl restart chatterd

Leave your chatterd running until you have received your tutorial grade.

TIP:

server$ sudo systemctl status chatterd

is your BEST FRIEND in debugging your server. If you get an HTTP error code 500 Internal Server Error, or if you just don’t know whether your HTTP request has made it to the server, the first thing to do is run sudo systemctl status chatterd on your server and study its output, including any error messages and debug printouts from your server.

That’s all we need to do to prepare the back end. Before you return to work on your front end, wrap up your work here by submitting your files to GitHub.

Submitting your back end

We will only grade files committed to the main branch. If you use multiple branches, please merge them all to the main branch for submission.

Navigate to your reactive folder:

server$ cd ~/reactive/

Commit changes to the local repo:

server$ git commit -am "llmtools backend"

and push your chatterd folder to the remote GitHub repo:

server$ git push

If git push fails due to changes made to the remote repo by your tutorial partner, you must run git pull first. Then you may have to resolve any conflicts before you can git push again.

Go to the GitHub website to confirm that your back-end files have been uploaded to your GitHub repo.


| Prepared by Xin Jie ‘Joyce’ Liu, Chenglin Li, and Sugih Jamin | Last updated October 26th, 2025 |